Agenda

The following topics will be discussed:

- Introduction

- Endpoint Learning

- ACI Fabric Traffic Forwarding

- References

Introduction

In this post we will look at how endpoints are learned and how traffic is forwarded in the ACI fabric.

Understanding how endpoints are learned and how traffic is forwarded can greatly simplify the troubleshooting process.

Endpoint Learning

ACI Endpoint

- An Endpoint consists of one MAC address and zero or more IP addresses

- IP address is always /32

- Each endpoint represents a single networking device
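As a minimal sketch of the definition above, an endpoint can be modelled as one MAC address plus a set of host addresses. The `Endpoint` class and its `add_ip` method are hypothetical names used for illustration only; they are not part of any Cisco API.

```python
from dataclasses import dataclass, field
from ipaddress import IPv4Address


@dataclass
class Endpoint:
    """Illustrative model: one MAC address plus zero or more /32 IP addresses."""
    mac: str
    ips: set[IPv4Address] = field(default_factory=set)

    def add_ip(self, ip: str) -> None:
        # IPv4Address rejects prefix notation such as "10.0.0.0/24",
        # so every stored address is a single host (/32) address.
        self.ips.add(IPv4Address(ip))


ep = Endpoint(mac="00:00:5e:00:53:01")  # a MAC-only endpoint is valid
ep.add_ip("192.168.1.10")               # IP addresses are optional extras
```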

In ACI, there are two types of Endpoints

- Local endpoints for a leaf reside directly on that leaf; these are directly attached network devices.

- Remote endpoints for a leaf reside on a remote leaf

- Both local and remote endpoints are learned from the data plane

- Local endpoints are the main source of endpoint information for the entire Cisco ACI fabric.

- A leaf learns endpoints (MAC and/or IP) as local

- The leaf reports its local endpoints to the spine via the COOP process

- The spine stores these in the COOP database and synchronizes them with the other spines

- The spine doesn’t push COOP database entries to each leaf. It just receives and stores them.

- Remote endpoints are stored on each leaf node as a cache. They are not reported to the spine COOP database.
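The reporting rules above can be sketched as a toy model. The `Spine` and `Leaf` classes, their method names, and the sample MAC string are assumptions made for illustration; real COOP messages carry much more information.

```python
class Spine:
    def __init__(self):
        self.coop_db = {}  # endpoint MAC -> name of the leaf that owns it

    def report(self, mac, leaf_name):
        # The spine only receives and stores; it never pushes entries to leaves.
        self.coop_db[mac] = leaf_name


class Leaf:
    def __init__(self, name, spine):
        self.name = name
        self.spine = spine
        self.local = set()  # directly attached endpoints
        self.remote = {}    # cache: remote MAC -> leaf it sits behind

    def learn_local(self, mac):
        self.local.add(mac)
        self.spine.report(mac, self.name)  # local endpoints are reported to COOP

    def learn_remote(self, mac, behind_leaf):
        self.remote[mac] = behind_leaf     # cached only, never reported


spine = Spine()
leaf101, leaf102 = Leaf("leaf101", spine), Leaf("leaf102", spine)
leaf101.learn_local("aa:aa")               # shows up in the COOP database
leaf102.learn_remote("aa:aa", "leaf101")   # stays in the local cache of leaf102
```

In this sketch, only `learn_local` touches the spine, which mirrors the point that the COOP database is built from local endpoints alone.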

Forwarding tables

In Cisco ACI 3 tables are used to maintain the network addresses of external devices, but these tables are used in a different way than used in traditional network as shown in the following table.

1. RIB Table is the VFR routing table, also known as LPM (Longest Prefix Table). It is populated with:

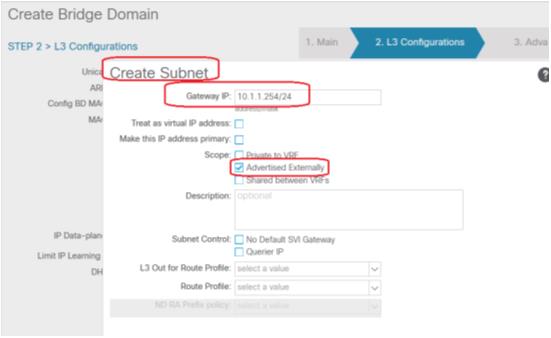

- Internal fabric subnets (non /32 only), these are bridge domain subnets

- External fabric routes (/32 and non /32)

- Static routes (/32 and non /32)

- Bridge domain SVI IP address

- LST (Local Station Table): This table contains local endpoints. This table is populated upon discovery of an endpoint.

- GST (Global Station Table): This is the cache table on the leaf node containing the remote endpoint information that has been learned through active conversations through the fabric.
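To illustrate what a longest prefix match lookup in the RIB means, here is a minimal sketch using Python's standard `ipaddress` module. The `lpm_lookup` function and the sample routes (other than the 192.168.1.0/24 bridge domain subnet used later in this post) are assumptions for illustration; a real leaf performs this lookup in hardware.

```python
from ipaddress import ip_address, ip_network


def lpm_lookup(rib, destination):
    """Return the (prefix, description) pair of the longest matching prefix, or None."""
    dst = ip_address(destination)
    matches = [(net, info) for net, info in rib.items() if dst in net]
    return max(matches, key=lambda m: m[0].prefixlen, default=None)


# Hypothetical RIB content: one bridge domain subnet, one external route,
# and one /32 static route.
rib = {
    ip_network("192.168.1.0/24"): "bridge domain subnet",
    ip_network("0.0.0.0/0"): "external route",
    ip_network("10.1.1.1/32"): "static route",
}

print(lpm_lookup(rib, "192.168.1.20"))  # matches /24 and /0, the /24 wins
print(lpm_lookup(rib, "10.1.1.1"))      # matches /32 and /0, the /32 wins
```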

How Endpoints are learned

Cisco ACI learns MAC and IP addresses in hardware by looking at the packet source MAC address and source IP address in the data plane instead of relying on ARP to obtain a next-hop MAC address for IP addresses.

This approach reduces the amount of resources needed to process and generate ARP traffic. It also allows detection of IP address and MAC address movement without the need to wait for GARP as long as some traffic is sent from the new host.

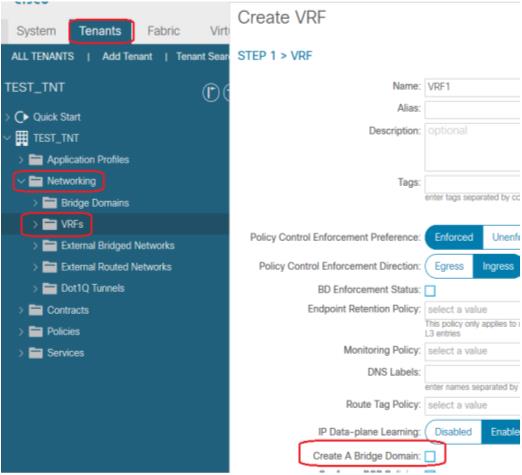

A Cisco ACI leaf learns source IP addresses only if unicast routing is enabled on the bridge domain. If unicast routing is not enabled, only source MAC addresses are learned, and the leaf performs only L2 switching.

Local Endpoint Learning

- Cisco ACI learns the MAC (and IP) address as a local endpoint when a packet comes into a Cisco ACI leaf switch from its front-panel ports.

- A Cisco ACI leaf always learns the source MAC address of the received packet

- A Cisco ACI leaf learns the source IP address only if the received packet is an ARP packet or a packet that is to be routed (a packet destined to the SVI MAC)
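The local learning rules above, combined with the unicast routing condition described earlier, can be summarised as a small decision function. This is only a sketch: `learn_local` and its parameter names are hypothetical, and the real decision is made in the switch hardware.

```python
def learn_local(src_mac, src_ip, *, is_arp, dst_is_svi_mac, unicast_routing):
    """Return the (mac, ip) pair a leaf would record; ip is None when it is not learned."""
    # The source MAC is always learned. The source IP is learned only when
    # unicast routing is enabled AND the packet is ARP or is to be routed.
    learn_ip = unicast_routing and (is_arp or dst_is_svi_mac)
    return src_mac, (src_ip if learn_ip else None)


# A bridged (non-ARP) frame: only the MAC is learned.
print(learn_local("aa:aa", "192.168.1.10",
                  is_arp=False, dst_is_svi_mac=False, unicast_routing=True))
# An ARP packet with unicast routing enabled: MAC and IP are learned.
print(learn_local("aa:aa", "192.168.1.10",
                  is_arp=True, dst_is_svi_mac=False, unicast_routing=True))
```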

Remote Endpoint Learning

When a packet is sent from one leaf to another leaf, Cisco ACI encapsulates the original packet with an outer header representing the source and destination leaf Tunnel Endpoint (TEP) and the Virtual Extensible LAN (VXLAN) header, which contains the bridge domain VNID or VRF VNID.

Packets that are switched carry the bridge domain VNID. Packets that are routed carry the VRF VNID.

- A Cisco ACI leaf learns the source MAC address of a packet received from a spine switch if the VXLAN header contains the bridge domain VNID.

- A Cisco ACI leaf learns the source IP address of a packet received from a spine switch if the VXLAN header contains the VRF VNID.
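As a rough sketch of the remote learning rule above, the receiving leaf can be thought of as picking the inner source MAC or the inner source IP depending on the kind of VNID in the VXLAN header, and associating it with the source TEP from the outer header. The `VxlanPacket` class, its field names, and the `"bd"`/`"vrf"` labels are assumptions for illustration; a real header carries a numeric VNID.

```python
from dataclasses import dataclass


@dataclass
class VxlanPacket:
    outer_src_tep: str  # TEP address of the source leaf (outer header)
    vnid_type: str      # "bd" for switched traffic, "vrf" for routed traffic
    inner_src_mac: str
    inner_src_ip: str


def learn_remote(pkt):
    """Return (key, tep): the address learned as a remote endpoint and the TEP it sits behind."""
    if pkt.vnid_type == "bd":
        return pkt.inner_src_mac, pkt.outer_src_tep  # switched: learn the MAC
    if pkt.vnid_type == "vrf":
        return pkt.inner_src_ip, pkt.outer_src_tep   # routed: learn the IP
    raise ValueError(f"unexpected VNID type: {pkt.vnid_type!r}")


switched = VxlanPacket("10.0.0.101", "bd", "aa:aa", "192.168.1.10")
routed = VxlanPacket("10.0.0.101", "vrf", "aa:aa", "192.168.1.10")
print(learn_remote(switched))
print(learn_remote(routed))
```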

Endpoint movement and bounce entries

a) At the initial state, the endpoint tables reflect the state of the network

b) Endpoint A moves to Leaf 103

- Leaf 103 learns about A when A sends its first packet

- Leaf 103 updates the COOP database on the spine switches with its new local endpoint

- If the COOP database has already learned the same endpoint from another leaf, COOP recognizes this event as an endpoint move and reports the move to the original leaf that held the old endpoint information.

- The old leaf that receives this notification deletes its old endpoint entry and creates a bounce entry that points to the new leaf. A bounce entry is basically a remote endpoint created by COOP communication instead of data-plane learning.

- Leaf 104 still contains the old location information of endpoint A

c) B sends a packet to A

- As B’s leaf (Leaf 104) has not yet updated its endpoint table with the new location of endpoint A, the packet is sent to Leaf 101/Leaf 102

- Because the bounce bit is set for endpoint A, Leaf 101/Leaf 102 bounces the received packet to Leaf 103.

- Leaf 103 updates its endpoint table with the endpoint B information

d) A replies to B

- Leaf 104 updates its endpoint table with the endpoint A information
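The move sequence above can be replayed with a small simulation. This is a simplified sketch under several assumptions: the `Fabric` class and its methods are hypothetical, the Leaf 101/Leaf 102 pair is collapsed into a single `leaf101`, and the COOP notification is modelled as a direct table update.

```python
class Fabric:
    def __init__(self, leaf_names):
        self.coop = {}                             # endpoint -> owning leaf
        self.tables = {n: {} for n in leaf_names}  # per-leaf endpoint tables

    def learn_local(self, leaf, ep):
        old = self.coop.get(ep)
        self.tables[leaf][ep] = ("local", leaf)
        self.coop[ep] = leaf
        if old is not None and old != leaf:
            # COOP sees the move and notifies the old leaf, which replaces
            # its stale entry with a bounce entry pointing to the new leaf.
            self.tables[old][ep] = ("bounce", leaf)

    def forward(self, src_leaf, ep, max_hops=4):
        """Return the leaves a packet for ep visits after leaving src_leaf."""
        path, leaf = [], src_leaf
        for _ in range(max_hops):
            kind, where = self.tables[leaf][ep]
            if kind == "local":
                return path
            path.append(where)  # remote and bounce entries both point elsewhere
            leaf = where
        raise RuntimeError("forwarding loop")


fabric = Fabric(["leaf101", "leaf103", "leaf104"])
fabric.learn_local("leaf101", "A")                     # a) initial state
fabric.tables["leaf104"]["A"] = ("remote", "leaf101")  # cached on Leaf 104
fabric.learn_local("leaf103", "A")                     # b) A moves to Leaf 103
bounced_path = fabric.forward("leaf104", "A")          # c) B sends a packet to A
fabric.tables["leaf104"]["A"] = ("remote", "leaf103")  # d) Leaf 104 relearns A
direct_path = fabric.forward("leaf104", "A")
print(bounced_path, direct_path)
```

The first path goes through the old leaf because of the bounce entry; once Leaf 104 has relearned A from the reply, traffic goes straight to the new leaf.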

ACI Fabric Traffic Forwarding

At the initial state

- Endpoint tables on Leaf 101 and Leaf 106 are empty

- COOP tables on spine switches are empty

- The VRF table contains the following information:

- 192.168.1.254/24: BD SVI

- 192.168.1.0/24: bridge domain subnet

The following steps describe the traffic flow from host A to host B

1. Host A sends an ARP request to resolve host B’s IP address to its MAC address

2. Leaf 101 learns host A’s source MAC and IP addresses and reports this information to the spine switches through COOP (Council of Oracle Protocol)

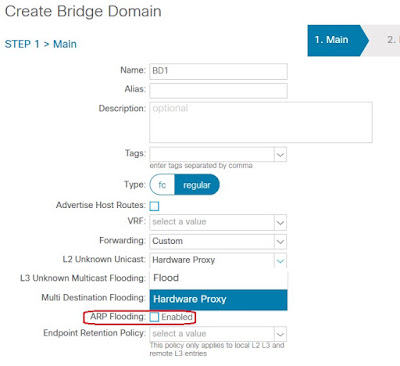

3. One of the following events can happen, depending on the setting of the ARP Flooding option in the bridge domain configuration

- If the ARP Flooding option is enabled, Leaf 101 floods the ARP request inside the bridge domain

- If the ARP Flooding option is disabled and Leaf 101 has information about host B’s IP address, Leaf 101 sends the ARP request to the destination leaf based on the ARP target IP field.

- If the ARP Flooding option is disabled and Leaf 101 has no information about host B’s IP address, Leaf 101 sends the ARP packet to the spine switches. If the spine switch has no information about host B’s IP address either, it drops the ARP packet and generates a broadcast ARP request from the bridge domain SVI to resolve host B’s IP address to its MAC address. This process is called ARP gleaning.

4. Host B sends an ARP reply to the spine switch. Remember, this is a reply to the request generated by the spine

5. Leaf 106 learns host B’s MAC and IP addresses and reports this information to the spine switches

6. Host A sends a second ARP request to resolve host B’s IP address to its MAC address

7. As Leaf 101 doesn’t know host B’s IP address (the ARP target IP address) yet, depending on the setting of the ARP Flooding option, it either floods the request or sends it to the spine. In either case, the ARP request will find its way to host B

8. Host B sends an ARP reply to Leaf 101

9. Leaf 101 learns host B’s MAC and IP addresses as a remote endpoint and stores this information in its endpoint table

10. Host A sends an IP packet to host B

11. Leaf 101 looks up the destination MAC address in its endpoint table. If a match is found, it determines whether a contract is necessary to forward the frame; if so, it needs to look at the L3/L4 contents of the packet to determine whether a contract exists. If no match is found, the behavior depends on the L2 Unknown Unicast option of the bridge domain:

- If this option is set to Hardware Proxy, Leaf 101 sends the packet to the spine switches’ anycast address. If the spine switch doesn’t have information about host B, it drops the packet. This process is called spine-proxy

- If this option is set to Flood, Leaf 101 floods the packet inside the bridge domain
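The two bridge domain decisions in the walkthrough above (ARP handling in step 3 and the Hardware Proxy or Flood choice in step 11, which corresponds to the L2 Unknown Unicast setting of the bridge domain) can be sketched as two small functions. The function names, parameters, and returned strings are hypothetical, and the case where the spine does know the target is inferred from the text rather than stated in it.

```python
def handle_arp_request(arp_flooding, leaf_knows_target, spine_knows_target):
    """Return what happens to an ARP request arriving on the ingress leaf."""
    if arp_flooding:
        return "flood in bridge domain"
    if leaf_knows_target:
        return "unicast to destination leaf"
    # The leaf has no entry for the ARP target IP: hand the request to the spine.
    if spine_knows_target:
        return "spine forwards to destination leaf"  # inferred case
    return "spine drops request, ARP gleaning from BD SVI"


def handle_unknown_unicast(l2_unknown_unicast, spine_knows_mac):
    """Return what happens to a bridged frame whose destination MAC is unknown to the leaf."""
    if l2_unknown_unicast == "flood":
        return "flood in bridge domain"
    if l2_unknown_unicast == "hardware-proxy":
        if spine_knows_mac:
            return "spine-proxy to destination leaf"
        return "spine drops packet"
    raise ValueError(f"unexpected setting: {l2_unknown_unicast!r}")


# Step 3 of the walkthrough: flooding disabled, nobody knows host B yet.
print(handle_arp_request(False, False, False))
# Unknown destination MAC with Hardware Proxy and an empty COOP database.
print(handle_unknown_unicast("hardware-proxy", False))
```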

- Host A sends an IP packet to host B. The destination MAC address in the packet is the bridge domain SVI MAC address

- Leaf 101 receives the packet and learns host A’s MAC and IP addresses

- Leaf 101 looks up the destination MAC address in its endpoint table. A match with the BD SVI MAC address is found, so this is a packet to be routed

- Leaf 101 performs a Longest Prefix Match lookup for the destination IP address in its VRF table.

- If a match is found and the match is an external subnet, the packet is routed to the leaf where the VRF L3Out is attached. The policy is applied there, based on the contracts applied to the subnet defined in the Networks section of the L3Out.

- If a match is found and the match is a fabric-internal subnet (remember, all bridge domain subnets are in the VRF table), Leaf 101 looks up host B’s /32 IP address in the endpoint table

- If a /32 match is found, Leaf 101 determines the EPG of the destination and applies the policy, then forwards the packet to the destination leaf if the packet is permitted

- If no /32 match is found, the packet is sent to the spine. If the spine doesn’t know the /32 destination either, it drops the packet and starts the ARP gleaning process for host B’s /32 IP address

- If no prefix match is found in the VRF table, the packet is dropped
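The routed lookup order described above can be condensed into one decision function. This is a sketch under stated assumptions: `route_packet`, the `"internal"`/`"external"` labels, the dictionary-based tables, and the sample external route are all hypothetical, and contract (policy) evaluation is reduced to a note in the returned string.

```python
from ipaddress import ip_address, ip_network


def route_packet(dst_ip, vrf_table, endpoint_table, spine_coop):
    """Return a description of what the ingress leaf does with a routed packet."""
    dst = ip_address(dst_ip)
    matches = [(net, kind) for net, kind in vrf_table.items() if dst in net]
    if not matches:
        return "drop"  # no prefix match in the VRF table
    _, kind = max(matches, key=lambda m: m[0].prefixlen)  # longest prefix wins
    if kind == "external":
        return "route to L3Out leaf"
    # Fabric-internal subnet: look for the host /32 in the endpoint table.
    if dst_ip in endpoint_table:
        return "apply policy, forward to " + endpoint_table[dst_ip]
    if dst_ip in spine_coop:
        return "spine forwards to " + spine_coop[dst_ip]
    return "spine drops packet, starts ARP gleaning"


vrf_table = {
    ip_network("192.168.1.0/24"): "internal",  # bridge domain subnet
    ip_network("172.16.0.0/16"): "external",   # hypothetical L3Out route
}
print(route_packet("192.168.1.20", vrf_table, {"192.168.1.20": "leaf106"}, {}))
print(route_packet("192.168.1.30", vrf_table, {}, {}))
```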

References